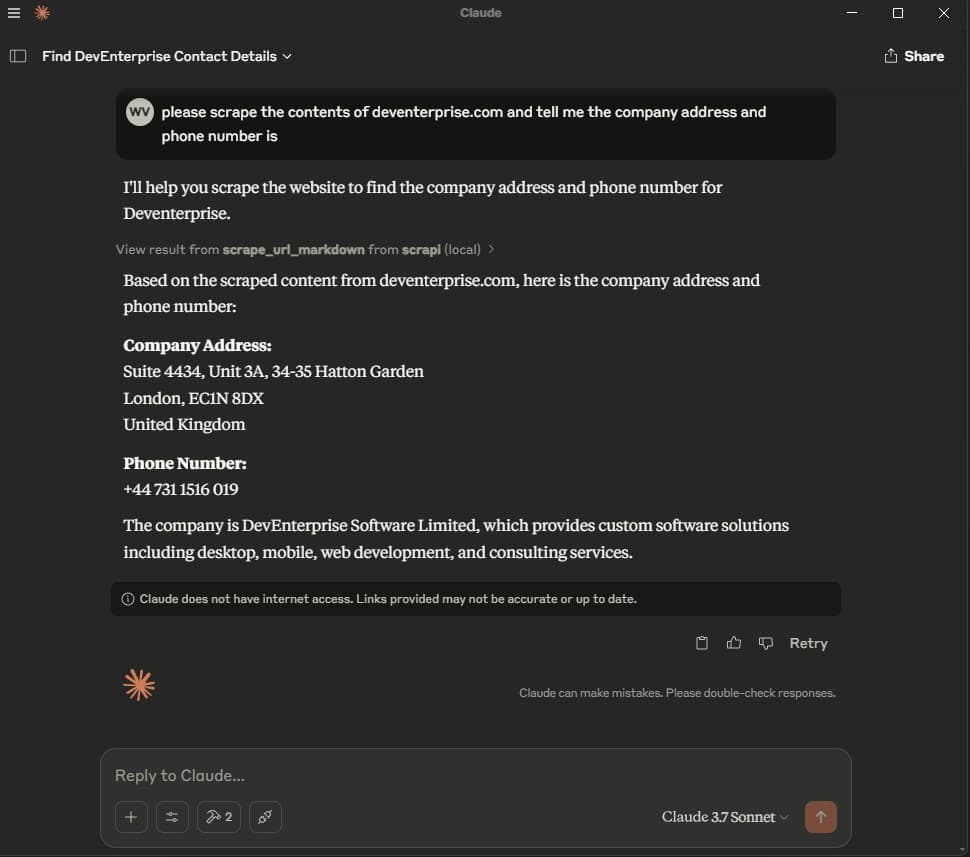

MCP Server

We have an official MCP Server implementation that runs in the cloud or can be run locally using Docker or NPX.

Tools

-

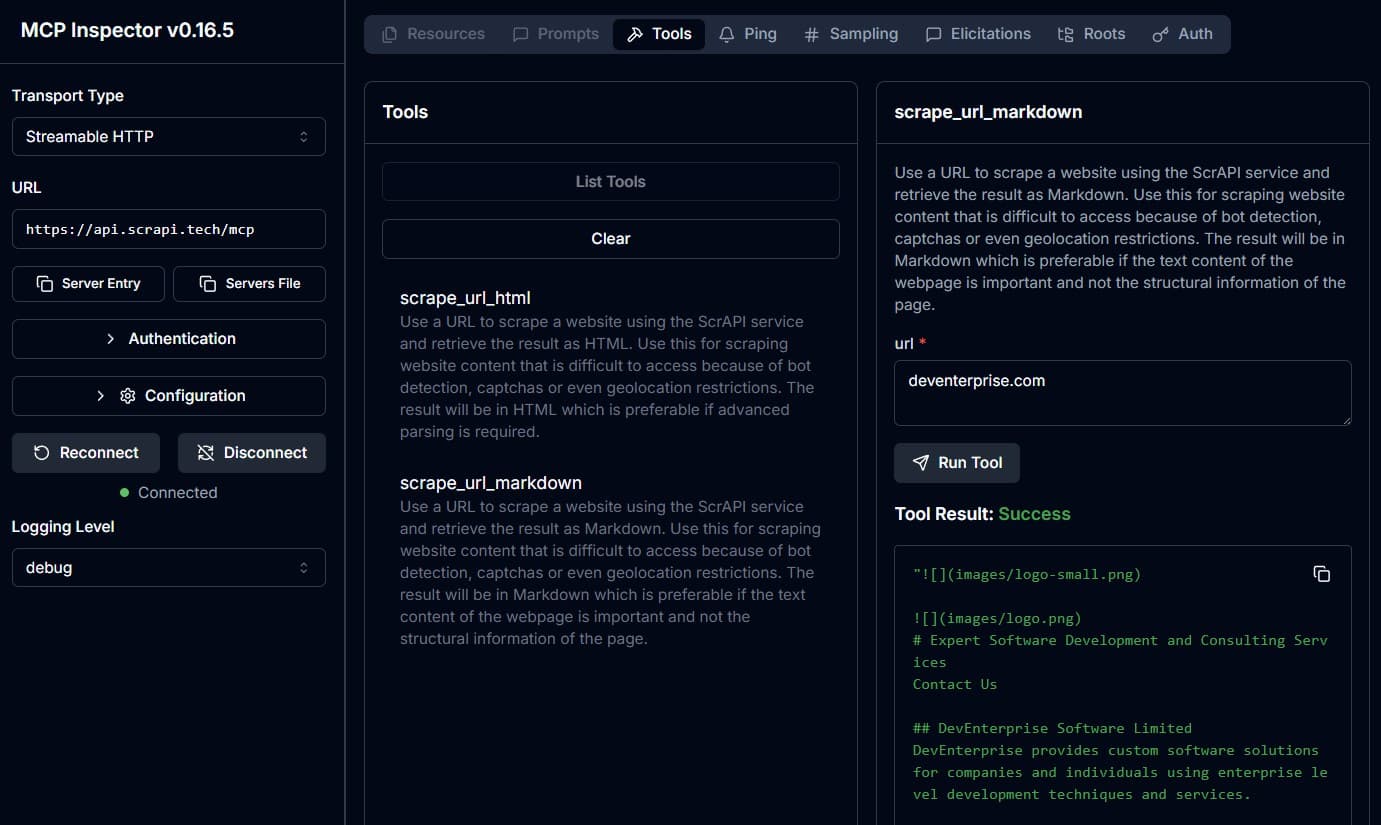

scrape_url_html- Use a URL to scrape a website using the ScrAPI service and retrieve the result as HTML. Use this for scraping website content that is difficult to access because of bot detection, captchas or even geolocation restrictions. The result will be in HTML which is preferable if advanced parsing is required.

- Inputs:

url(string, required): The URL to scrapebrowserCommands(string, optional): JSON array of browser commands to execute before scraping

- Returns: HTML content of the URL

- scrape_url_markdown - Use a URL to scrape a website using the ScrAPI service and retrieve the result as Markdown. Use this for scraping website content that is difficult to access because of bot detection, captchas or even geolocation restrictions. The result will be in Markdown which is preferable if the text content of the webpage is important and not the structural information of the page.

  - Inputs:

    - url (string, required): The URL to scrape
    - browserCommands (string, optional): JSON array of browser commands to execute before scraping

  - Returns: Markdown content of the URL
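
Because browserCommands is declared as a string rather than an array, a client has to serialize the command list before passing it to either tool. The following is a minimal sketch of how the tool arguments might be assembled; `build_scrape_arguments` is a hypothetical helper (not part of ScrAPI), the example URL is a placeholder, and the outer `name`/`arguments` shape follows the general MCP tool-call convention rather than anything specific to this server.

```python
import json


def build_scrape_arguments(url, commands=None):
    """Build the arguments object for scrape_url_html or scrape_url_markdown.

    Hypothetical helper for illustration only.
    """
    arguments = {"url": url}
    if commands:
        # browserCommands is a string input, so the array is serialized to JSON text.
        arguments["browserCommands"] = json.dumps(commands)
    return arguments


arguments = build_scrape_arguments(
    "https://example.com/products",  # placeholder URL
    [{"click": "#accept-cookies"}, {"wait": 2000}],
)

# Shape of a tool call as an MCP client would typically send it.
print(json.dumps({"name": "scrape_url_markdown", "arguments": arguments}, indent=2))
```

Omitting the `commands` argument leaves browserCommands out entirely, which matches its optional status in the input list.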

Browser Commands

Both tools support optional browser commands that allow you to interact with the page before scraping. This is useful for:

- Clicking buttons (e.g., “Accept Cookies”, “Load More”)

- Filling out forms

- Selecting dropdown options

- Scrolling to load dynamic content

- Waiting for elements to appear

- Executing custom JavaScript

Available Commands

Commands are provided as a JSON array string. All commands are executed with human-like behavior (random mouse movements, variable typing speed, etc.):

| Command | Value | Example |
|---|---|---|
| click / tap | CSS selector of the element to click. | { "click": "#buttonId" } |
| input / fill / type | CSS selector to target and value to enter into input field. | { "input": { "input[name='email']": "example@test.com" } } |
| select / choose / pick | CSS selector to target and value or text to select from the options. | { "select": { "select[name='country']": "USA" } } |
| scroll | Number of pixels to scroll down (use negative values to scroll up). | { "scroll": 1000 } |
| wait | Amount of time to wait in milliseconds (maximum of 15000). | { "wait": 5000 } |
| waitfor / wait-for / wait_for | CSS selector of the element to wait for. | { "waitfor": "#newForm" } |
| javascript / js / eval | Custom JavaScript snippet to execute against the page. | { "javascript": "console.log('hello!!')" } |
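
Since the commands travel as a JSON string, a malformed array is easy to send by accident. The sketch below checks a command string on the client side against the table above. It is not the service's own validation: `validate_commands` is a hypothetical helper, and the rules that each command object has exactly one key and that names are matched case-sensitively are assumptions inferred from the examples.

```python
import json

# Command names and aliases copied from the table above, mapped to a canonical name.
ALIASES = {
    "click": "click", "tap": "click",
    "input": "input", "fill": "input", "type": "input",
    "select": "select", "choose": "select", "pick": "select",
    "scroll": "scroll",
    "wait": "wait",
    "waitfor": "waitfor", "wait-for": "waitfor", "wait_for": "waitfor",
    "javascript": "javascript", "js": "javascript", "eval": "javascript",
}
MAX_WAIT_MS = 15000  # documented maximum for the wait command


def is_number(value):
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def validate_commands(commands_json):
    """Return a list of problems; an empty list means the string looks well-formed."""
    try:
        commands = json.loads(commands_json)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(commands, list):
        return ["top-level value must be a JSON array"]

    problems = []
    for index, command in enumerate(commands):
        # Assumption: one command per object, as in every documented example.
        if not isinstance(command, dict) or len(command) != 1:
            problems.append(f"command {index}: expected an object with exactly one key")
            continue
        name, value = next(iter(command.items()))
        kind = ALIASES.get(name)
        if kind is None:
            problems.append(f"command {index}: unknown command '{name}'")
        elif kind in ("click", "waitfor", "javascript") and not isinstance(value, str):
            problems.append(f"command {index}: '{name}' expects a string")
        elif kind in ("input", "select") and not isinstance(value, dict):
            problems.append(f"command {index}: '{name}' expects a selector-to-value object")
        elif kind == "scroll" and not is_number(value):
            problems.append(f"command {index}: 'scroll' expects a number of pixels")
        elif kind == "wait" and not (is_number(value) and 0 <= value <= MAX_WAIT_MS):
            problems.append(f"command {index}: 'wait' expects 0 to {MAX_WAIT_MS} milliseconds")
    return problems


print(validate_commands('[{"click": "#accept-cookies"}, {"wait": 20000}]'))
```

Running the check before calling a tool catches typos in command names and over-long waits locally instead of after a scrape request has been made.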

Example Usage

    [
      {"click": "#accept-cookies"},
      {"wait": 2000},
      {"input": {"input[name='search']": "web scraping"}},
      {"click": "button[type='submit']"},
      {"waitfor": "#results"},
      {"scroll": 500}
    ]

Finding CSS Selectors

If you need help finding CSS selectors, try the Rayrun browser extension: select the elements you want to interact with and it generates the CSS selector for you.

For more details, see the Browser Commands documentation.

Setup

Cloud Server

The ScrAPI MCP Server is also available in the cloud over SSE (https://api.scrapi.tech/mcp/sse) and over streamable HTTP (https://api.scrapi.tech/mcp).

Cloud MCP servers are not widely supported yet, but you can connect to this one directly from your own custom clients or use MCP Inspector to test it. There is currently no way to pass your API key through when connecting to the cloud MCP server, so if you need to use your API key, use one of the local MCP server options below.
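
For a custom client, the first message sent to a streamable HTTP endpoint is normally a JSON-RPC `initialize` request. The sketch below only builds that request with the Python standard library and does not send it. The protocol version string and the `clientInfo` values are assumptions, and nothing here has been verified against the ScrAPI cloud server; in practice an MCP SDK would handle this handshake.

```python
import json
import urllib.request

MCP_ENDPOINT = "https://api.scrapi.tech/mcp"  # streamable HTTP endpoint from the text above


def build_initialize_request(endpoint=MCP_ENDPOINT):
    """Build (but do not send) an MCP initialize request."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            # Assumption: the server accepts this MCP protocol revision.
            "protocolVersion": "2025-03-26",
            "capabilities": {},
            # Hypothetical client identity, for illustration only.
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Streamable HTTP servers may answer with plain JSON or an event stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


request = build_initialize_request()
print(request.get_method(), request.full_url)

# To actually send it (requires network access):
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(response.status, response.read().decode("utf-8"))
```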

Local Usage with Claude Desktop

Add the following to your claude_desktop_config.json:

Docker

    {
      "mcpServers": {
        "scrapi": {
          "command": "docker",
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "SCRAPI_API_KEY",
            "deventerprisesoftware/scrapi-mcp"
          ],
          "env": {
            "SCRAPI_API_KEY": "<YOUR_API_KEY>"
          }
        }
      }
    }

NPX

    {
      "mcpServers": {
        "scrapi": {
          "command": "npx",
          "args": [
            "-y",
            "@deventerprisesoftware/scrapi-mcp"
          ],
          "env": {
            "SCRAPI_API_KEY": "<YOUR_API_KEY>"
          }
        }
      }
    }
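
If claude_desktop_config.json already lists other MCP servers, the scrapi entry has to be merged in rather than pasted over the whole file. The following is a rough sketch of that merge on an in-memory dictionary; `add_scrapi_server` is a hypothetical helper, the `other` server is made-up sample data, and reading or writing the real file (whose location depends on your platform) is left out.

```python
import copy
import json


def add_scrapi_server(config, api_key, use_docker=False):
    """Return a copy of config with the scrapi entry added under mcpServers."""
    updated = copy.deepcopy(config)  # leave the caller's dictionary untouched
    if use_docker:
        command = "docker"
        args = ["run", "-i", "--rm", "-e", "SCRAPI_API_KEY",
                "deventerprisesoftware/scrapi-mcp"]
    else:
        command = "npx"
        args = ["-y", "@deventerprisesoftware/scrapi-mcp"]
    updated.setdefault("mcpServers", {})["scrapi"] = {
        "command": command,
        "args": args,
        "env": {"SCRAPI_API_KEY": api_key},
    }
    return updated


# Made-up existing configuration with one unrelated server.
existing = {"mcpServers": {"other": {"command": "node", "args": ["server.js"]}}}
merged = add_scrapi_server(existing, "<YOUR_API_KEY>")
print(json.dumps(merged, indent=2))
```

Passing `use_docker=True` produces the Docker variant shown earlier; in both cases any servers already configured are preserved.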